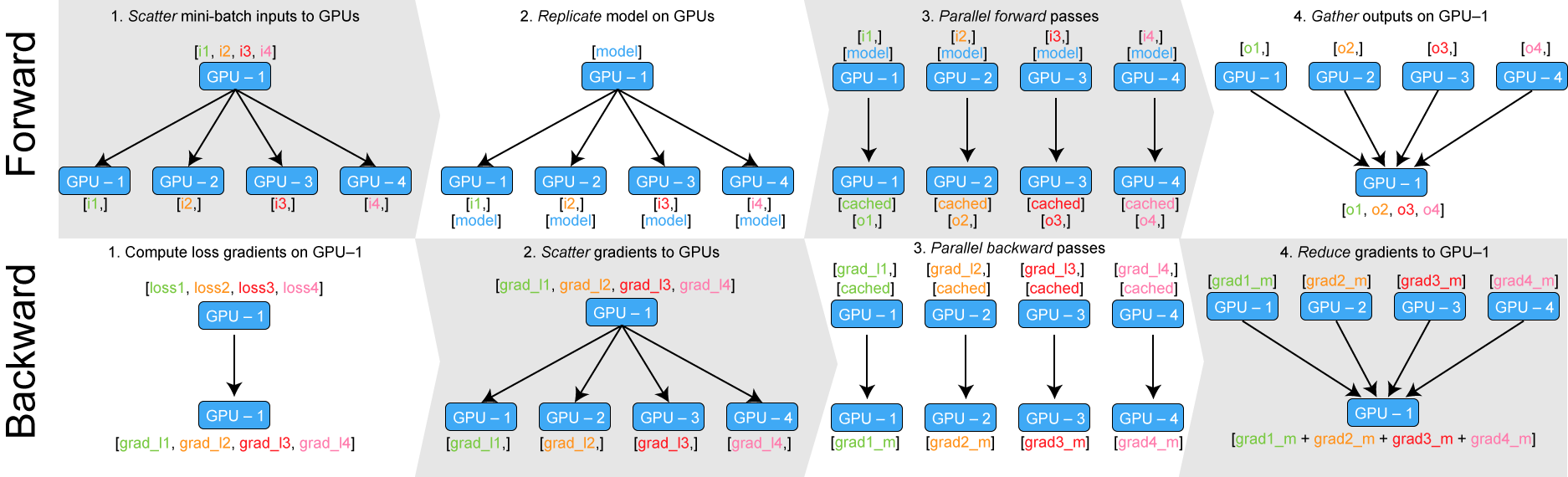

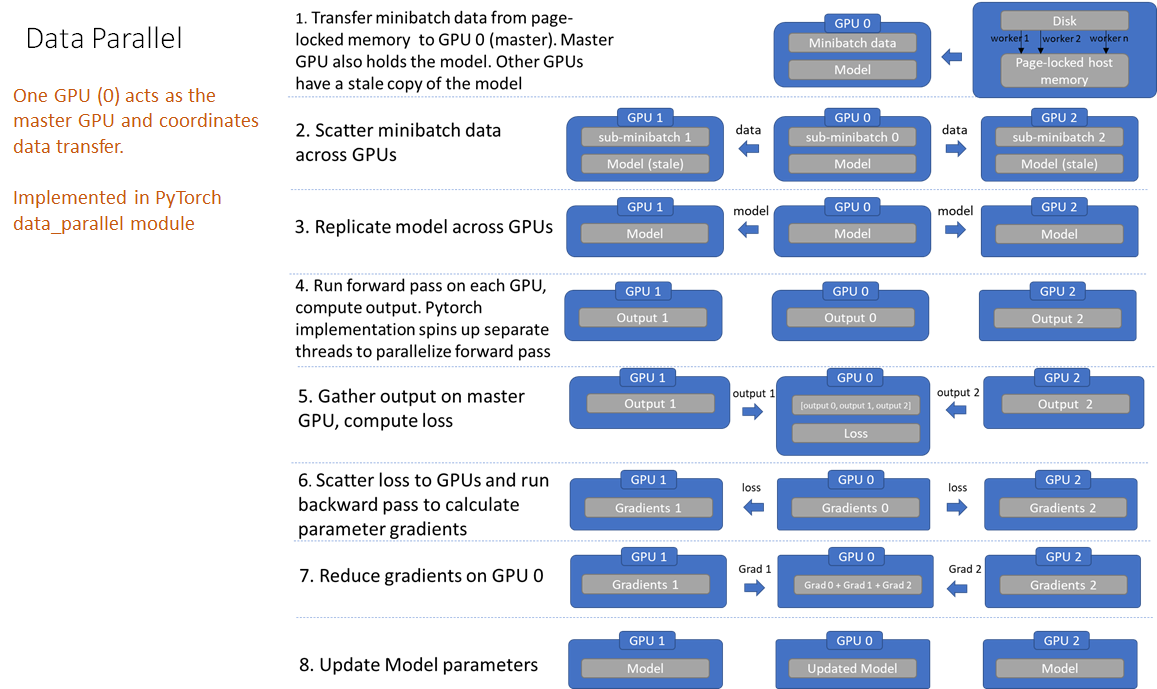

How distributed training works in Pytorch: distributed data-parallel and mixed-precision training | AI Summer

MONAI v0.3 brings GPU acceleration through Auto Mixed Precision (AMP), Distributed Data Parallelism (DDP), and new network architectures | by MONAI Medical Open Network for AI | PyTorch | Medium

Training Memory-Intensive Deep Learning Models with PyTorch's Distributed Data Parallel | Naga's Blog

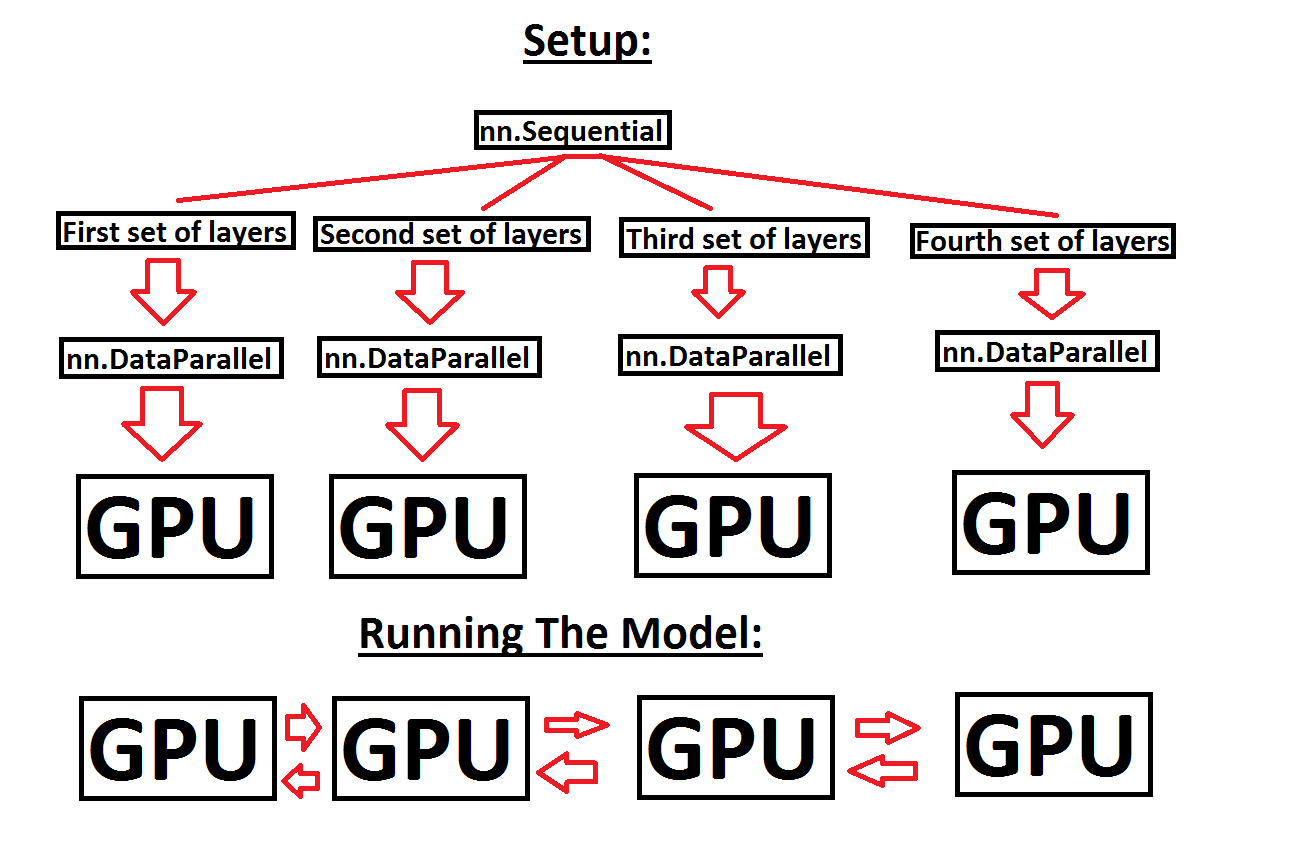

Help with running a sequential model across multiple GPUs, in order to make use of more GPU memory - PyTorch Forums

PyTorch on Twitter: "We're excited to announce support for GPU-accelerated PyTorch training on Mac! Now you can take advantage of Apple silicon GPUs to perform ML workflows like prototyping and fine-tuning. Learn

Announcing the NVIDIA NVTabular Open Beta with Multi-GPU Support and New Data Loaders | NVIDIA Technical Blog